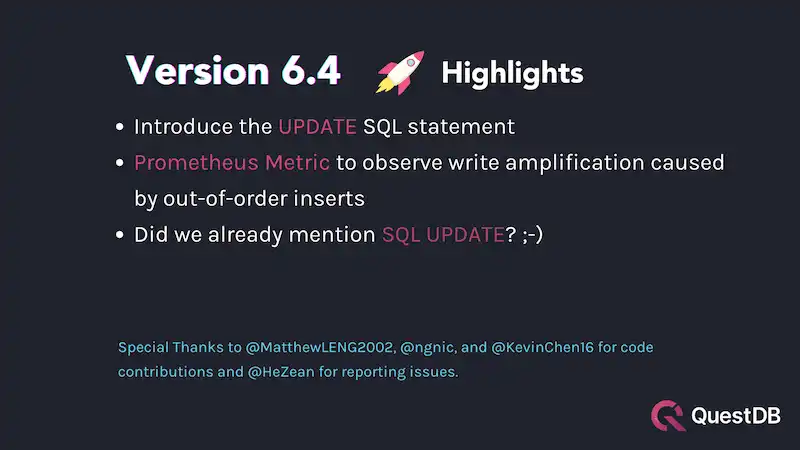

We just released QuestDB 6.4. A new release is always exciting! However, QuestDB 6.4 is a special release. Why? It is introducing one of the most awaited features: SQL UPDATE! You read it right, QuestDB now allows updating already written data.

Why does UPDATE matter?

One could argue: Time-series data should be immutable. After all, these are records of historical facts, and history is immutable. While I can agree with this sentiment, there are valid use-cases for updating time-series data. Imagine an IoT application that records sensor data in QuestDB and uses it for various analytics. It sounds like a typical append-only-never-mutate use case.

What happens when a sensor breaks down and feeds bad data into QuestDB? It may badly skew the resulting analytics! How do you correct bad data? Even without UPDATE you have some options. For example, you could maintain another table with records of broken sensors and use it to exclude data from analytics. While this sounds nice in theory - and there is a certain elegance in how it fits into the append-only world - it complicates our data model. It forces us to consult (join) the other table whenever querying sensor data. It results in more complicated queries and the join also negatively impacts performance. There are other options to solve this without UPDATE, but they all feel like a workaround.

How do you solve the broken-sensor problem with updates? You have 2 options:

- Repairing bad data

- Excluding bad data

The first option is best when you know what the right values should be. Imagine our hypothetical sensor measures temperature and someone has accidentally switched it to generate data in Fahrenheit while it should have been in Celsius. Fixing such data is trivial. The SQL could look like this:

UPDATE sensor_data

SET temperature = (temperature - 32) * 5.0 / 9

WHERE (measurement_timestamp between X and Y) and (sensor_id = Z)

Data exclusion is applicable in cases where you have no means of correcting the data and you want to simply exclude them from analytics. Imagine a temperature sensor which starts to generate completely random values. There is no way to repair such data and the best you can do is to exclude the bad data from all analytics. This could be done e.g. by having a column “isValid” which would be true by default and when you detect a broken sensor then you run a query like this:

UPDATE temperature_table

SET isValid = false

WHERE (measurement_timestamp between X and Y) and (sensor_id = Z)

This allows analytical queries to exclude such data, without introducing additional complexity due to extra joins.

Does it mean QuestDB can be used for OLTP use-cases?

As I’ve shown above the UPDATE greatly simplifies some use-cases. The internal design was driven by this principle: Updates cannot slow down other paths. QuestDB is known for awesome ingestion and query performance and we cannot compromise on that for the sake of UPDATEs. We are also assuming UPDATEs in time series are rare. Hence we decided that whoever is executing the update should pay the performance price. All this means UPDATEs are relatively costly and this feature won’t magically turn QuestDB into an OLTP database. We don’t want to become yet another Postgres or MySQL. Our mission is to build the best time-series database for real-time analytics and the design of this feature reflects it. If you are interested in more in-depth design then check out our documentation.

Extra noteworthy feature: Write amplification metrics

Out of order inserts can cause write amplification. When you insert a row with a timestamp earlier than what was already committed, QuestDB merges existing data with the new row. In the worst case, a single row insertion will copy the entire table partition. QuestDB has various parameters to tune this behaviour; Commit Lag is the most important parameter. To do any meaningful tuning you need to understand your data. At the very least you need to know how frequently Out of order events occur and how much data was written as a result of the merge process. QuestDB 6.4 is adding Out of order related metrics to the already existing metrics you can consume via Prometheus.

What’s next?

There are 2 major items ahead of us: Data replication and QuestDB Cloud. Replication is part of our story to accommodate more data, execute queries faster and provide fault tolerance. QuestDB is already used for critical applications, but as of QuestDB 6.4, it offers little support for fault tolerance. Our users design their own solutions. For example, some of them rely on container orchestration, but we know we can do better. We would like to simplify this process and offer fault tolerance as a first-class feature. Data replication is a prerequisite for that. Data replication will also serve as a foundation for horizontal scaling which will allow us to escape the capacity limitation of a single box.

Data replication will simplify the operational aspect of QuestDB. It will allow everyone to run a highly available QuestDB both in Cloud and on-prem. However, we would like to do more. What if you like QuestDB capabilities, but you don’t want to run it? If you are like that then you will love to hear we are building a managed version of QuestDB. It’ll allow you to consume QuestDB as a service and we will cover the operational aspect of it. The service is currently being rolled out. Give us a shout if it sounds interesting to you.

Words of appreciation

QuestDB is an open-source project. For us, it means more than just the Apache License. We see it as a way to connect with our community. It gives all QuestDB users a chance to dig into source code and improve it. We love all contributors, but in this blog I’d like to cherry-pick 2 people who made their first contributors:

- MatthewLENG2002 made our REST API more robust and predictable.

- Ngnic added a GitHub action to check Pull Requests have the right format.

Thank you.

If you would like to start contributing to QuestDB then join QuestDB Slack, Say Hi and I’m sure we can find a way for you to improve QuestDB. Did I mention we ❤️ getting GitHub stars? ;-)

And last but not least: We are hiring!