There are many ways to observe a system.

QuestDB offers Prometheus-compatible metrics out of the box. We have detailed guides on setting up logs and alerts in our documentation and a comprehensive post on using QuestDB with Prometheus.

However, there are times when you need something quick and straightforward and

don't need a third-party tool. In this post, we'll explore some useful curl

commands and SQL queries for monitoring the load and state of your QuestDB

instance. These commands are designed to output a single number.

Note that all examples can be tested against our public demo instance. They just might not return interesting values!

Monitoring concurrent SQL queries

You can monitor the number of concurrent SQL queries running on your QuestDB instance using the following command:

curl -G https://demo.questdb.io/exp \

--data-urlencode 'query=select count(*)-1 from query_activity();' \

-d 'nm=true'

This command subtracts 1 from the total count to exclude the query used to fetch the data itself.

Monitoring WAL lag

As of version 7.3.10, the default table type is WAL tables. These tables ensure that data modifications are first recorded in a log before being applied to the database.

WAL tables permit concurrent data ingestion from more than one interface, enable simultaneous modification of data, and allow schema changes without locking the entire table. They also allow sequential disk writes that are faster than random ones.

Without WAL tables, features like deduplication and the handling of out-of-order data would be much more difficult, if not impossible.

We can get a breakdown of a WAL table's state with the wal_tables(); function:

wal_tables()

It'll return a table like so, which breaks down WAL services in sequence:

| name | suspended | writerTxn | writerLagTxnCount | sequencerTxn | errorTag | errorMessage | memoryPressure |

|---|---|---|---|---|---|---|---|

| trips | false | 0 | 0 | 0 | 0 | ||

| AAPL_orderbook | false | 343 | 0 | 343 | 0 | ||

| trades | false | 23864847 | 0 | 23864847 | 0 | ||

| ethblocks_json | false | 2923 | 0 | 2923 | 0 |

As part of their operation, WAL tables decouple the transaction commit from the disk write process. This decoupling of operations introduces what's known as "WAL lag":

WAL lag in QuestDB is the delay between when data is written to the Write-Ahead Log (WAL) and when it is fully committed and applied to the main database tables. Knowing the lag time will indicate precisely how long before an ingested item is available to queries.

To monitor the Write-Ahead Log (WAL) lag, use the following command:

curl -G https://demo.questdb.io/exp \

--data-urlencode 'query=select sum(writerLagTxnCount) from wal_tables();' \

-d 'nm=true'

This command sums up the transaction lag counts from all WAL tables, giving you an overall view of the WAL lag.

Monitoring suspended tables

Sometimes a table may get suspended due to a full disk or to kernel limits. As one might assume, there are risks associated with the suspended state. For example, there is risk of WAL segment corruption. To compound matters, multiple transactions might rely on the corrupted segment, making it difficult to find the transaction number needed to resume operations.

So, it's important to know as soon as possible when tables are suspended.

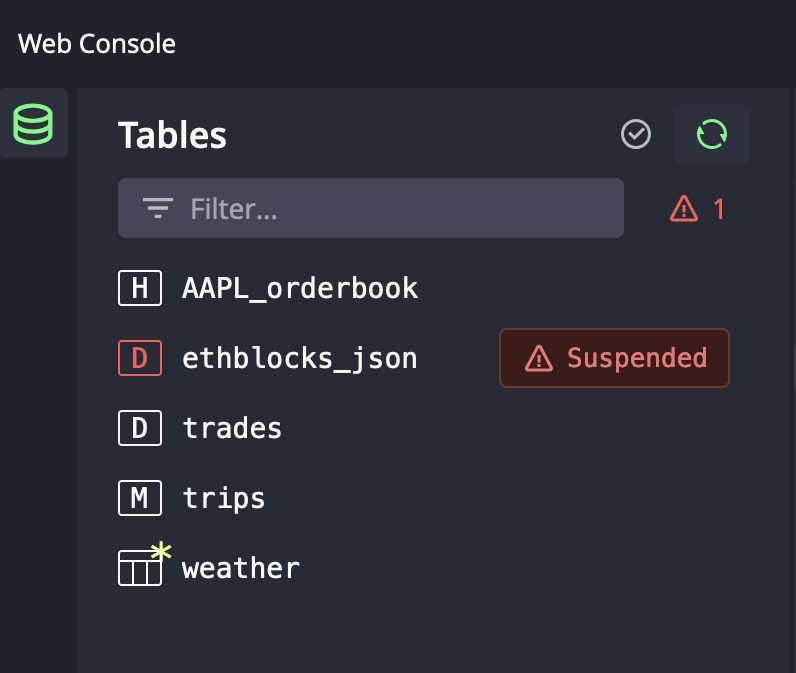

If you're using the Web Console, you can look under the Tables list:

Suspended tables will show up in red and provide helpful debugging information.

However, to check for any suspended tables in your QuestDB instance programmatically, run:

curl -G https://demo.questdb.io/exp \

--data-urlencode 'query=select count(*) from wal_tables() where suspended;' \

-d 'nm=true'

This will return the number of suspended tables, which can help you identify potential issues.

Querying table storage stats

You might also be interested in table storage statistics. Here's a query that return the partition count, row count, and disk size for each table, along with totals:

WITH t AS (

SELECT * FROM table_storage

)

SELECT

tableName,

partitionCount,

rowCount,

diskSize

FROM t

UNION

SELECT

'**all_tables**',

SUM(partitionCount),

SUM(rowCount),

SUM(diskSize)

FROM t;

Partition row counts

To analyze the number of rows in the most recent partitions compared to average counts over previous partitions, you can use:

WITH t AS (

SELECT *

FROM table_partitions('your_table')

LIMIT 100

)

SELECT

name,

partitionBy,

numRows,

AVG(numRows) OVER (ORDER BY name ASC ROWS 5 PRECEDING EXCLUDE CURRENT ROW) AS avg5,

AVG(numRows) OVER (ORDER BY name ASC ROWS 30 PRECEDING EXCLUDE CURRENT ROW) AS avg30

FROM t

ORDER BY name DESC;

This query helps you detect anomalies in data ingestion patterns by comparing recent partitions with historical averages.

In this example, we compared each partition with the average of the previous 5 and 30 partitions, which corresponds to 5 and 30 hours for hourly partitioning.

If your data is partitioned differently — such as daily, weekly, monthly, or yearly — you can adjust these numbers to match your partitioning scheme:

-

Daily: Use

ROWS 7 PRECEDINGandROWS 30 PRECEDINGto compare the last week's and month's data. -

Weekly: Use

ROWS 4 PRECEDINGandROWS 12 PRECEDINGto represent the last month and quarter. -

Monthly: Use

ROWS 12 PRECEDINGto compare against the past year. -

Yearly: Adjust accordingly based on the number of years you want to include in your comparison.

This will help you adjust the query to reflect your actual needs.

Securing monitoring endpoints

When exposing monitoring endpoints, it is important to prevent unauthorized access. With QuestDB, we can apply basic authentication. When enabled for the Web Console, it will also secure the monitoring endpoints accessed via HTTP.

Once enabled basic authentication, any HTTP requests to the Web Console or monitoring endpoints will require authentication via a username and password.

You can control authentication for health checks and monitoring endpoints using

the QDB_HTTP_HEALTH_CHECK_AUTHENTICATION_REQUIRED configuration parameter:

QDB_HTTP_HEALTH_CHECK_AUTHENTICATION_REQUIRED=true

-

true: Endpoints require auth for health checks and monitoring. -

false: Endpoints remain accessible without authentication.

By configuring authentication, you can keep your system details safe.

Conclusion

These curl commands and SQL queries offer a straightforward way to monitor

various aspects of your QuestDB instance. By securing your monitoring setup with

basic authentication and appropriate configuration, you can ensure that

sensitive monitoring data remains protected.

To learn more, check out our GitHub repository, try the demo, or join the conversation on Slack.

Thanks for reading!