It was a little over a year and a half into my tenure as a cloud engineer at QuestDB when I started my first Pull Request to the core database. Before that, I had spent my time working with tools like Kubernetes and Docker to manage QuestDB deployments across multiple datacenters. I implemented production-grade observability solutions, wrote a Kubernetes operator in Golang, and pored over seemingly minute details of our AWS bills.

While I enjoyed the cloud-native work (and still do!), I continued to have a nagging desire to meaningfully contribute to the actual database that I spent all day orchestrating. It took the birth of my daughter, and the accompanying parental leave, for me to disconnect a bit and think about my priorities and career goals.

What was really stopping me from contributing? Was it the dreaded imposter syndrome? Or just a backlog of cloud-related tasks on my plate? After all, QuestDB is open source, so not much was stopping me from submitting some code changes.

With this mindset, I had a meeting with Vlad, our CTO, as I was ending my leave and about to start ramping my workload back up.

Config Hot-Reloading

Since I was coming back to work part-time for a bit, I figured that I could pick up a project that wasn't particularly time-sensitive so I could continue to help out with the baby at home.

One item that came up was the ability for QuestDB to adjust its runtime configuration on-the-fly. To do so, we'd need to monitor the config file, server.conf, and apply any configuration changes to the database without restarting it.

This task immediately resonated with me, since I've personally felt the pain of not having this "hot-reload" feature. I've spent way too many hours writing Kubernetes operator code that restarts a running QuestDB Pod on a mounted ConfigMap change. Having the opportunity to build a hot-reload feature was tantalizing, to say the least.

So I was off to the races, excited to get started working on my first major contribution to QuestDB.

We quickly arrived at a basic design.

- Build a new FileWatcher class: Monitor the database's server.conf file for changes.

- Detect changes: FileWatcher detects changes to the server.conf file.

- Load new values: Read server.conf and load any new configuration values from the updated file.

- Validate the updated configuration: Validate the new server configuration.

- Apply the new configuration: Apply the new configuration values to the running server without restarting it.

Seems easy enough!

But like in most production-grade code, this seemingly simple problem got quite complex very quickly...

Complications

It's one thing to add a new feature to a relatively greenfield codebase. But it's quite another to add one to a mature codebase with over 100 contributors and years of history. Not all of these challenges were evident at the start, but over time, I started to internalize them.

- The FileWatcher component needed to be cross-platform, since QuestDB supports Linux, macOS, and Windows.

- The new reloading server config (called DynamicServerConfig) had to slot cleanly into the existing plumbing that runs QuestDB and allows QuestDB Enterprise to plug in to the open core.

- We wanted the experience to be as seamless as possible for end users. This meant that we couldn't forcibly close open database connections or restart the entire server.

- Caching configuration values was much more common throughout the codebase than we initially thought. Many classes and factories read the server configuration only once on initialization, and would need to be re-initialized to accommodate a new config setting.

- As with everything we do at QuestDB, performance was paramount. The solution needed to be as efficient as possible, leaving compute resources for more important things, like ingesting and querying data.
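To make the caching problem concrete, here is a minimal Java sketch with two hypothetical consumers of a configuration value: one copies the value in its constructor (the pattern described above), the other keeps the configuration reference and reads through it on every call. `TimeoutConfig`, `CachingConsumer`, and `LiveConsumer` are illustrative names, not QuestDB classes.

```java
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical single-setting configuration; not a real QuestDB interface.
interface TimeoutConfig {
    long queryTimeoutMillis();
}

// Copies the value once at construction, so later config changes are never observed.
final class CachingConsumer {
    private final long timeoutMillis;

    CachingConsumer(TimeoutConfig config) {
        this.timeoutMillis = config.queryTimeoutMillis();
    }

    long timeoutMillis() {
        return timeoutMillis;
    }
}

// Keeps the config reference and reads through it on every call.
final class LiveConsumer {
    private final TimeoutConfig config;

    LiveConsumer(TimeoutConfig config) {
        this.config = config;
    }

    long timeoutMillis() {
        return config.queryTimeoutMillis();
    }
}

class CachingDemo {
    public static void main(String[] args) {
        AtomicLong timeout = new AtomicLong(5_000);
        TimeoutConfig config = timeout::get; // backed by a mutable value

        CachingConsumer cached = new CachingConsumer(config);
        LiveConsumer live = new LiveConsumer(config);

        timeout.set(30_000); // simulate a hot-reloaded setting

        System.out.println(cached.timeoutMillis()); // 5000 (stale)
        System.out.println(live.timeoutMillis());   // 30000
    }
}
```

Reading through the reference costs an extra indirection per call, which is one reason hot paths tend to cache. The trade-off is that every cached value then needs an explicit re-initialization hook before it can pick up a new setting.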

Inotify, kqueue, epoll, oh my!

Nine times out of ten, if you asked me to write a cross-platform file watcher library, I would google "cross-platform filewatcher in Java". But when working on a codebase that values strict memory accounting and efficient resource usage, it just didn't feel right to pull a third-party library off the shelf. To maintain the performance that QuestDB is known for, it's crucial to understand what every bit of code is doing under the hood. So, in spite of the famous "Not Invented Here Syndrome", I set about learning how to implement file watchers at the syscall level in C.

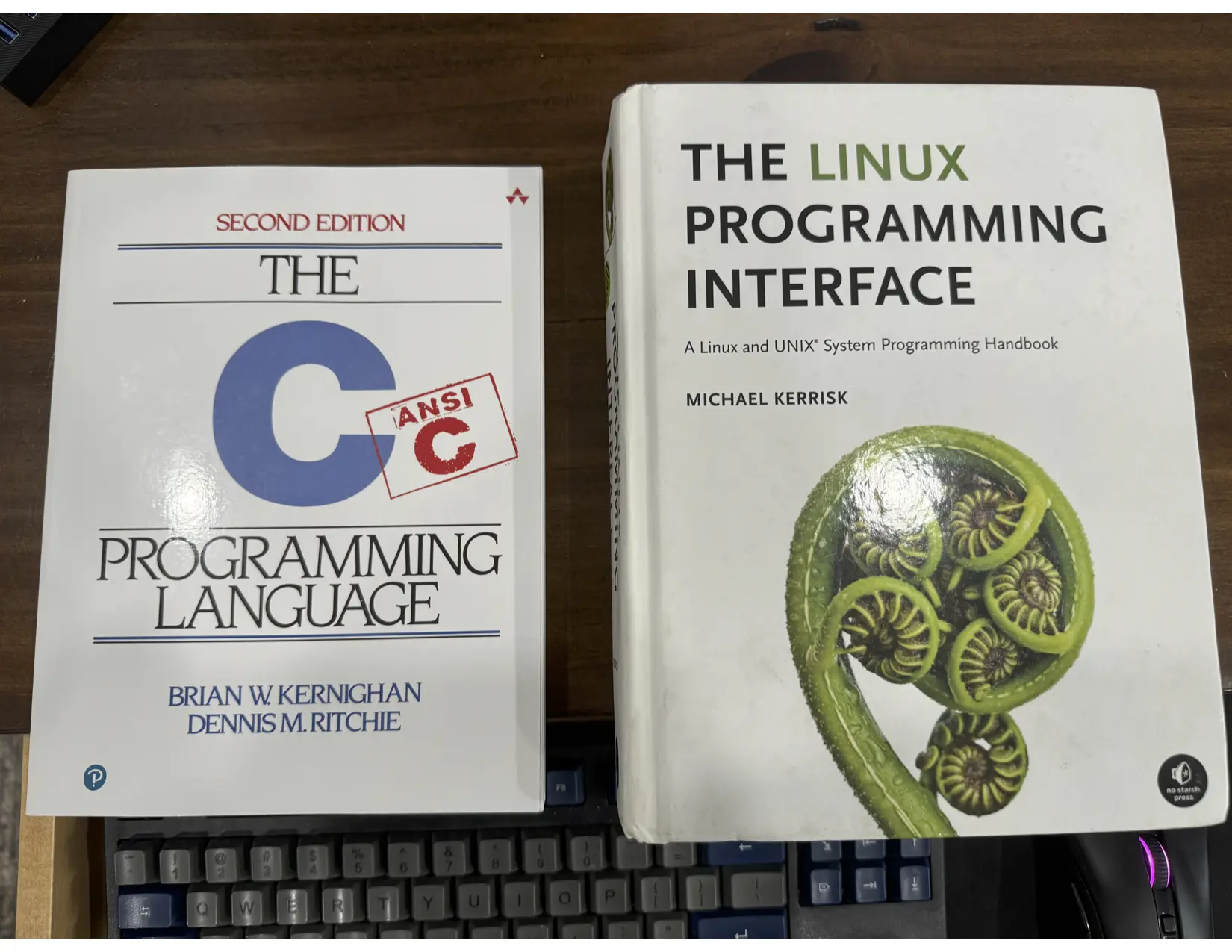

I've felt this way a few times in my career, picking up something so brand new that I wasn't even sure where to start. And during these times, I've reached for venerable and canonical books on the subject to learn the basics. So, I hit up Amazon and got some reading material.

Since I'd recently been coding on my fancy new Ryzen Zen 4-based EndeavourOS desktop, I decided to start with the Linux implementation. I began writing some primitive C code against kernel APIs like inotify and epoll to park a thread and wait for a change to a specific file or directory. Once one of these lower-level APIs reported a change, execution would resume, and I would perform some basic filtering for a particular filename before returning.
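QuestDB's Linux watcher is hand-written C against inotify and epoll, which isn't reproduced here. To show the same control flow from the JVM side, the sketch below swaps in the JDK's standard `java.nio.file.WatchService` (which OpenJDK implements on top of inotify on Linux): register the directory, park the thread until events arrive, then filter for a single filename. Treat it as an illustration of the pattern, not QuestDB's code; `ConfWatcher` and `awaitChange` are hypothetical names.

```java
import java.io.IOException;
import java.nio.file.FileSystems;
import java.nio.file.Path;
import java.nio.file.StandardWatchEventKinds;
import java.nio.file.WatchEvent;
import java.nio.file.WatchKey;
import java.nio.file.WatchService;

final class ConfWatcher {
    // Parks the calling thread until `fileName` inside `dir` is created or modified.
    static void awaitChange(Path dir, String fileName) throws IOException, InterruptedException {
        try (WatchService watcher = FileSystems.getDefault().newWatchService()) {
            // Watches are registered on directories, so watch the parent and filter by name.
            dir.register(watcher,
                    StandardWatchEventKinds.ENTRY_CREATE,
                    StandardWatchEventKinds.ENTRY_MODIFY);
            while (true) {
                WatchKey key = watcher.take(); // blocks until at least one event is queued
                for (WatchEvent<?> event : key.pollEvents()) {
                    if (event.kind() == StandardWatchEventKinds.OVERFLOW) {
                        return; // events were dropped, so treat it as a possible change
                    }
                    Path changed = (Path) event.context(); // relative to `dir`
                    if (changed.getFileName().toString().equals(fileName)) {
                        return;
                    }
                }
                if (!key.reset()) {
                    throw new IOException("watched directory is no longer accessible: " + dir);
                }
            }
        }
    }

    public static void main(String[] args) throws Exception {
        Path dir = Path.of(args.length > 0 ? args[0] : ".");
        System.out.println("waiting for server.conf in " + dir.toAbsolutePath());
        awaitChange(dir, "server.conf");
        System.out.println("server.conf changed");
    }
}
```

Watching the directory rather than the file itself also catches editors that save by writing a new file and renaming it over the old one, since that shows up as a create event for the same name.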

Once I was happy with my implementation, I still needed to make the code available to the JVM, where QuestDB runs. I was able to use the Java Native Interface (JNI) to wrap my functions in macros that allow the JVM to load the compiled binary and call them directly from Java.

But this was only the start. I also needed to make this work for macOS and Windows. Unfortunately, inotify is Linux-specific and isn't available on either of those operating systems, so I needed to find an alternative. Since macOS borrows much of its kernel and userland from FreeBSD, the two share many of the same core APIs. This includes kqueue, which I was able to use instead of inotify to implement the core functionality of my filewatcher.

Luckily, QuestDB already had some kqueue code, since we use it to handle network traffic on those platforms. So I only had to add a few new functions in C to get the functionality that I required.

As for Windows? Vlad was a lifesaver there, since I don't have a Windows machine! He used low-level WinAPI libraries to implement the filewatcher and made them available to QuestDB through the JNI.

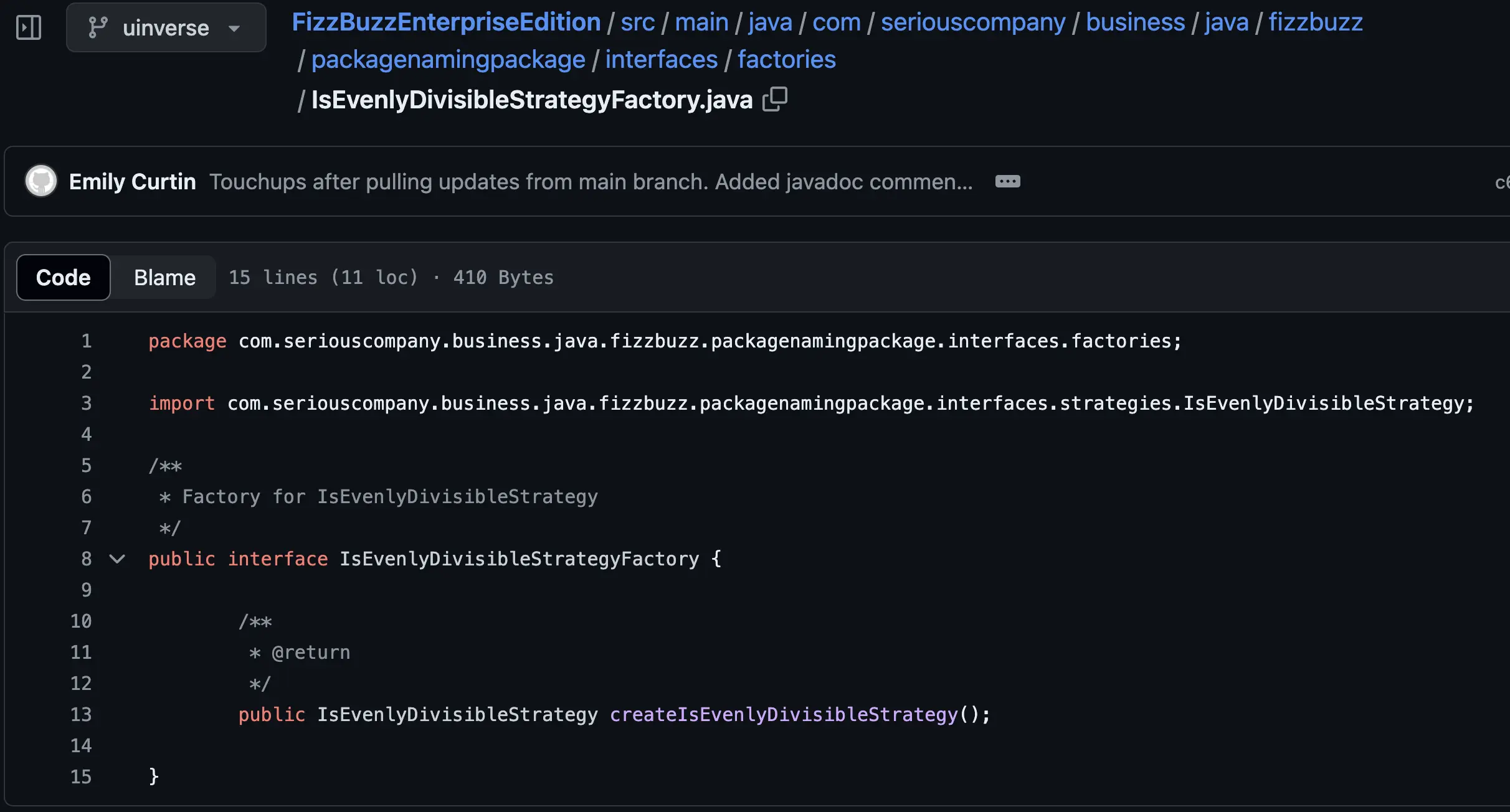

Navigating plumbing like Mario and Luigi

When I first started reading the QuestDB codebase, I found a web of classes and interfaces with abstract names like FactoryProviderFactory and PropBootstrapConfiguration. Was this the "enterprise Java" style of programming that I'd heard so much about?

After a lot of F12 and Opt+Shift+F12 in IntelliJ, I started to build a mental map of the project structure, and things started to make more sense. At its core, the entrypoint is a linear process. We use Java's built-in Properties class to read server.conf into a property of a BootstrapConfiguration, pass that to the constructor of a Bootstrap class, and use that as an input to ServerMain, QuestDB's entrypoint.

The reason for so many factories, interfaces, and abstract classes is twofold:

- it allows devs to mock just about any dependency in unit tests

- it creates abstraction layers for QuestDB Enterprise to use and extend existing core components
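As a small illustration of the first point, here is a sketch of a class that depends only on an interface, so a unit test can inject a stub instead of touching the disk. `ConfFiles`, `RealConfFiles`, and `ConfLocator` are hypothetical names chosen for this example; they are not QuestDB's actual abstractions.

```java
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical file-system abstraction; not a QuestDB interface.
interface ConfFiles {
    boolean exists(Path path);
}

// Production implementation backed by the real file system.
final class RealConfFiles implements ConfFiles {
    @Override
    public boolean exists(Path path) {
        return Files.exists(path);
    }
}

// Depends only on the interface, so tests (or an extending edition) can swap the implementation.
final class ConfLocator {
    private final ConfFiles files;

    ConfLocator(ConfFiles files) {
        this.files = files;
    }

    String describe(Path confPath) {
        return files.exists(confPath) ? "using " + confPath : "using built-in defaults";
    }
}

class MockDemo {
    public static void main(String[] args) {
        Path conf = Path.of("server.conf");
        // Production wiring uses the real file system...
        System.out.println(new ConfLocator(new RealConfFiles()).describe(conf));
        // ...while a unit test can inject a stub without touching the disk.
        System.out.println(new ConfLocator(path -> true).describe(conf));  // using server.conf
        System.out.println(new ConfLocator(path -> false).describe(conf)); // using built-in defaults
    }
}
```

The same seam serves the second point: an extended edition can supply its own implementation of the interface without modifying the class that consumes it.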

Now, I was ready to make some changes! I added a new DynamicServerConfiguration interface that exposed a reload() method, and created an implementation of this interface that used the delegate pattern to wrap a legacy ServerConfiguration. When reload() was called, we would read the server.conf file, validate it, and atomically swap the delegate config with the new version. I then created an instance of my FileWatcher in the main QuestDB entrypoint with a callback that called DynamicServerConfiguration.reload() whenever it was triggered by a file change.
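Here is a minimal sketch of that reload flow, under several assumptions: `ServerConfig`, `PropsServerConfig`, and `DynamicConfig` are hypothetical, heavily trimmed stand-ins for QuestDB's ServerConfiguration and DynamicServerConfiguration; the `pg.user`/`pg.password` keys and the non-empty-username rule are illustrative validation only; and the config file is abstracted as a `Supplier<Reader>` so the example runs without a real server.conf. It shows the two ideas from the paragraph: every getter forwards to the current delegate, and a reload fully builds and validates a new delegate before swapping it in with a single atomic reference write.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.util.Properties;
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.Supplier;

// Hypothetical, heavily trimmed stand-in for a server configuration interface.
interface ServerConfig {
    String pgUser();
    String pgPassword();
}

// Immutable snapshot built from one read of the config file.
final class PropsServerConfig implements ServerConfig {
    private final String user;
    private final String password;

    PropsServerConfig(Properties props) {
        this.user = props.getProperty("pg.user", "");
        this.password = props.getProperty("pg.password", "");
        // Illustrative validation: refuse to build a snapshot with no username.
        if (user.isEmpty()) {
            throw new IllegalArgumentException("pg.user must not be empty");
        }
    }

    @Override public String pgUser() { return user; }
    @Override public String pgPassword() { return password; }
}

// Delegate-pattern wrapper: callers keep one object while the snapshot behind it changes.
final class DynamicConfig implements ServerConfig {
    private final Supplier<Reader> source; // opens the current contents of the config file
    private final AtomicReference<ServerConfig> delegate;

    DynamicConfig(Supplier<Reader> source) throws IOException {
        this.source = source;
        this.delegate = new AtomicReference<>(parse());
    }

    private ServerConfig parse() throws IOException {
        Properties props = new Properties();
        try (Reader reader = source.get()) {
            props.load(reader);
        }
        return new PropsServerConfig(props); // throws if validation fails
    }

    // Intended to be invoked from the file watcher's callback.
    boolean reload() {
        try {
            delegate.set(parse()); // fully built and validated before it becomes visible
            return true;
        } catch (IOException | IllegalArgumentException e) {
            return false; // keep serving the previous, known-good snapshot
        }
    }

    ServerConfig current() { return delegate.get(); }

    @Override public String pgUser() { return delegate.get().pgUser(); }
    @Override public String pgPassword() { return delegate.get().pgPassword(); }
}

class ReloadDemo {
    public static void main(String[] args) throws IOException {
        AtomicReference<String> file = new AtomicReference<>("pg.user=alice\npg.password=secret");
        DynamicConfig config = new DynamicConfig(() -> new StringReader(file.get()));
        Runnable onFileChanged = config::reload; // what a watcher callback would run

        file.set("pg.user=bob\npg.password=hunter2");
        onFileChanged.run();
        System.out.println(config.pgUser()); // bob

        file.set("pg.user=\n"); // invalid: empty username
        System.out.println(config.reload()); // false
        System.out.println(config.pgUser()); // bob (old snapshot retained)
    }
}
```

Because readers only ever see a complete snapshot through the atomic reference, a half-written or invalid server.conf never becomes visible to the rest of the server.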

As you can imagine, wiring this all up wasn't the easiest, since I needed to maintain the existing class initialization order so that all dependencies would be ready at the correct time. I also didn't want to significantly modify the entrypoint of QuestDB. I felt this would not only confuse developers, but also cause problems when trying to compile Enterprise Edition.

Vlad had some great advice for me here, paraphrasing, "Make a change and re-run unit tests. If you've broken 100s, then try a different way. If you've only broken around 5, then you're on the right track."

Now, what can we actually reload?

There are a lot of possible settings to change in QuestDB, at all different levels of the database. At first, we thought that hot-reloading something like a query timeout would be a nice feature to have. This way, if I found that a specific query was taking a long time to execute, I could simply modify my server.conf without having to restart the database.

Unfortunately, query timeouts are cached deep inside the cairo query engine, and updating those components to read directly from the DynamicServerConfiguration would be an exercise in futility.

After a lot of poking and prodding of the codebase, we found something that would work: pgwire credentials! QuestDB supports configurable read/write and read-only users that are used to secure communication with the database over the PostgreSQL wire protocol. We validate these users' credentials with a class that reads them from a ServerConfiguration and stores them in a custom (optimized) UTF-8 sink.

I was able to modify this class to accept my new dynamic configuration, cache the configuration reference it last read, and check on each call whether that reference had changed. Because the dynamic configuration uses the delegate pattern, a successful reload (which re-initializes the delegate) produces a new object at a different memory address, so the cached reference no longer matches. At that point, the class knows to update its username and password sinks with the newly updated config values.
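The sketch below models that reference check. `PgCredentials` and `CredentialChecker` are hypothetical names; plain `StringBuilder`s stand in for QuestDB's optimized UTF-8 sinks, an `AtomicReference` stands in for the dynamic configuration, and the checker assumes it is used by one thread at a time. The key line is the `!=` comparison: it compares object identity, which is cheap and is exactly what changes when a reload installs a new delegate.

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical immutable snapshot; every successful reload creates a new instance.
final class PgCredentials {
    final String user;
    final String password;

    PgCredentials(String user, String password) {
        this.user = user;
        this.password = password;
    }
}

// Refreshes its cached credentials only when the snapshot reference changes.
// Not thread-safe: assumes one thread uses a given checker at a time.
final class CredentialChecker {
    private final AtomicReference<PgCredentials> config; // stand-in for the dynamic config
    private final StringBuilder userSink = new StringBuilder();
    private final StringBuilder passwordSink = new StringBuilder();
    private PgCredentials cachedRef;
    private int refreshes;

    CredentialChecker(AtomicReference<PgCredentials> config) {
        this.config = config;
    }

    boolean check(CharSequence user, CharSequence password) {
        PgCredentials latest = config.get();
        if (latest != cachedRef) { // identity check: a reload swaps in a new object
            userSink.setLength(0);
            userSink.append(latest.user);
            passwordSink.setLength(0);
            passwordSink.append(latest.password);
            cachedRef = latest;
            refreshes++;
        }
        return CharSequence.compare(userSink, user) == 0
                && CharSequence.compare(passwordSink, password) == 0;
    }

    int refreshes() {
        return refreshes;
    }

    public static void main(String[] args) {
        AtomicReference<PgCredentials> config =
                new AtomicReference<>(new PgCredentials("admin", "quest"));
        CredentialChecker checker = new CredentialChecker(config);

        System.out.println(checker.check("admin", "quest"));   // true
        System.out.println(checker.check("admin", "quest"));   // true, sinks not rebuilt
        config.set(new PgCredentials("admin", "rotated"));     // simulate a reload
        System.out.println(checker.check("admin", "quest"));   // false
        System.out.println(checker.check("admin", "rotated")); // true
        System.out.println(checker.refreshes());               // 2
    }
}
```

Since reloads are rare, the common path is one reference comparison plus the content checks; the sinks are only rewritten after a reload.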

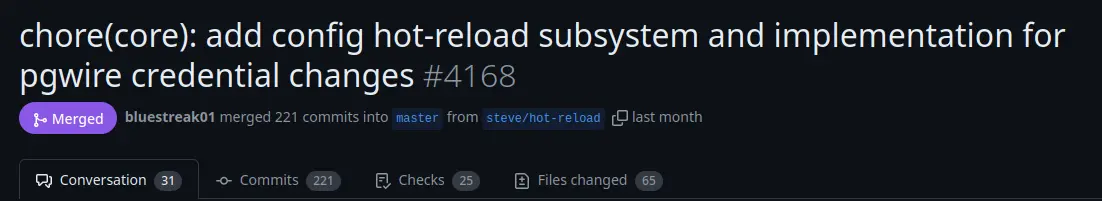

The big moment, ready to merge!

It takes a village to raise a child. I've learned that already in my short time as a father. And a Pull Request is no different. Both Vlad and Jaromir helped get this thing over the finish line. From acting as a sounding board to getting their hands dirty in Java and C code, they provided fantastic support over the 5 months that my PR was open.

Towards the end of the project, even though all tests were passing in core, there was still one wrinkle in QuestDB Enterprise that prevented us from merging the PR. We realized that our abstraction layers were not quite perfect, so we couldn't reuse some parts of the core codebase in Enterprise. Instead of re-architecting everything from scratch and probably adding weeks or more to the project, we ended up just copying a few lines from core into Enterprise. It compiled, tests passed, and everyone was happy.

Now that Enterprise and core were both ready to go with green check marks, I hit the "Merge" button on GitHub and went outside for a long walk.

Learnings

While this ended up being an incredibly long journey to "simply" let users change pgwire credentials on-the-fly, I consider it a massive personal success in my growth and development as a software engineer. The confidence that this task has given me cannot be overstated. From this project alone, I've:

- written my own C code for the first time

- learned several new kernel APIs

- used unsafe semantics in a memory-managed programming language

- navigated the inner workings of a massive, mature codebase

And all in a new IDE for me (IntelliJ)!

With confidence stemming from the breadth and depth of work in this project, I'm ready to take on my next challenge in the core QuestDB codebase. I've already implemented a few simple SQL functions and started to grok the SQL Expression Parser. But given our aggressive roadmap with features like Parquet support, Array data types, and an Apache Arrow ADBC driver, I'm sure that there are plenty of other things for me to contribute in the future! What's even more exciting is that I can use my cloud-native expertise to help drive the database forward as we move towards a fully distributed architecture.

If you're curious about all of this work, here's a link to the PR

And finally, if you find this type of programming rewarding, we're currently hiring Core Database Engineers.