Query & SQL Overview

Querying - as a base action - is performed in three primary ways:

- Query via the QuestDB Web Console

- Query via PostgreSQL

- Query via REST HTTP API

- Query via Apache Parquet

For efficient and clear querying, QuestDB provides SQL with enhanced time series extensions. This makes analyzing, downsampling, processing and reading time series data an intuitive and flexible experience.

Queries can be written into many applications using existing drivers and clients of the PostgreSQL or REST-ful ecosystems. However, querying is also leveraged heavily by third-party tools to provide visualizations, such as within Grafana, or for connectivity into broad data infrastructure and application environments such as with a tool like Cube.

Need to ingest data first? Checkout our Ingestion overview.

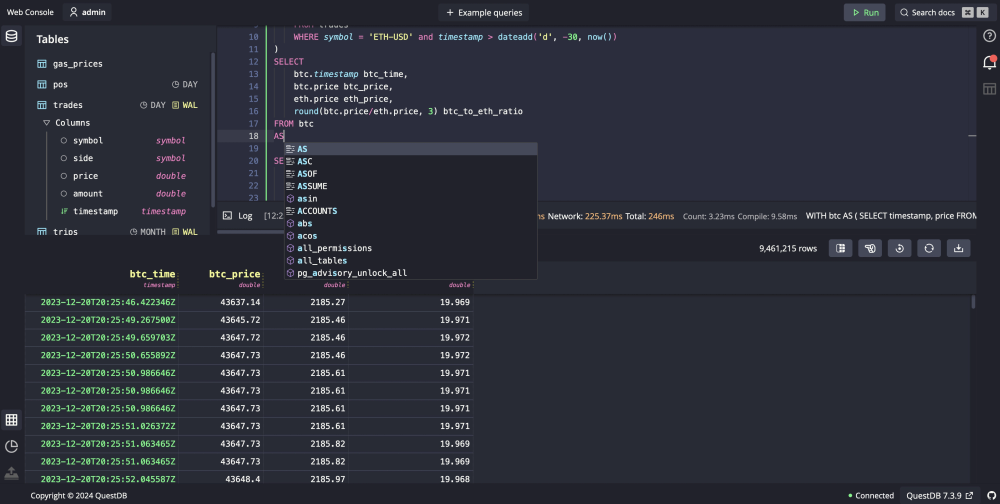

QuestDB Web Console

The Web Console is available by default at localhost:9000. The GUI makes it easy to write, return and chart queries. There is autocomplete, syntax highlighting, errors, and more. If you want to test a query or interact direclty with your data in the cleanest and simplest way, apply queries via the Web Console.

For an example, click Demo this query in the below snippet. This will run a query within our public demo instance and Web Console:

SELECT

timestamp, symbol,

first(price) AS open,

last(price) AS close,

min(price),

max(price),

sum(amount) AS volume

FROM trades

WHERE timestamp > dateadd('d', -1, now())

SAMPLE BY 15m;

If you see Demo this query on other snippets in this docs, they can be run against the demo instance.

PostgreSQL

Query QuestDB using the PostgreSQL endpoint via the default port 8812.

Examples in multiple languages are shown below.

- Python

- Java

- NodeJS

- Go

- C#

- C

- Ruby

- PHP

import psycopg as pg

import time

# Connect to an existing QuestDB instance

conn_str = 'user=admin password=quest host=127.0.0.1 port=8812 dbname=qdb'

with pg.connect(conn_str, autocommit=True) as connection:

# Open a cursor to perform database operations

with connection.cursor() as cur:

#Query the database and obtain data as Python objects.

cur.execute('SELECT * FROM trades_pg;')

records = cur.fetchall()

for row in records:

print(row)

# the connection is now closed

package com.myco;

import java.sql.*;

import java.util.Properties;

public class App {

public static void main(String[] args) throws SQLException {

Properties properties = new Properties();

properties.setProperty("user", "admin");

properties.setProperty("password", "quest");

properties.setProperty("sslmode", "disable");

final Connection connection = DriverManager.getConnection(

"jdbc:postgresql://localhost:8812/qdb", properties);

try (PreparedStatement preparedStatement = connection.prepareStatement(

"SELECT x FROM long_sequence(5);")) {

try (ResultSet rs = preparedStatement.executeQuery()) {

while (rs.next()) {

System.out.println(rs.getLong(1));

}

}

}

connection.close();

}

}

"use strict"

const { Client } = require("pg")

const start = async () => {

const client = new Client({

database: "qdb",

host: "127.0.0.1",

password: "quest",

port: 8812,

user: "admin",

})

await client.connect()

const res = await client.query("SELECT x FROM long_sequence(5);")

console.log(res.rows)

await client.end()

}

start().catch(console.error)

package main

import (

"database/sql"

"fmt"

_ "github.com/lib/pq"

)

const (

host = "localhost"

port = 8812

user = "admin"

password = "quest"

dbname = "qdb"

)

func main() {

connStr := fmt.Sprintf(

"host=%s port=%d user=%s password=%s dbname=%s sslmode=disable",

host, port, user, password, dbname)

db, err := sql.Open("postgres", connStr)

checkErr(err)

defer db.Close()

stmt, err := db.Prepare("SELECT x FROM long_sequence(5);")

checkErr(err)

defer stmt.Close()

rows, err := stmt.Query()

checkErr(err)

defer rows.Close()

var num string

for rows.Next() {

err = rows.Scan(&num)

checkErr(err)

fmt.Println(num)

}

err = rows.Err()

checkErr(err)

}

func checkErr(err error) {

if err != nil {

panic(err)

}

}

// compile with

// g++ libpq_example.c -o libpq_example.exe -I pgsql\include -L dev\pgsql\lib

// -std=c++17 -lpthread -lpq

#include <libpq-fe.h>

#include <stdio.h>

#include <stdlib.h>

void do_exit(PGconn *conn) {

PQfinish(conn);

exit(1);

}

int main() {

PGconn *conn = PQconnectdb(

"host=localhost user=admin password=quest port=8812 dbname=testdb");

if (PQstatus(conn) == CONNECTION_BAD) {

fprintf(stderr, "Connection to database failed: %s\n",

PQerrorMessage(conn));

do_exit(conn);

}

PGresult *res = PQexec(conn, "SELECT x FROM long_sequence(5);");

if (PQresultStatus(res) != PGRES_TUPLES_OK) {

printf("No data retrieved\n");

PQclear(res);

do_exit(conn);

}

int rows = PQntuples(res);

for (int i = 0; i < rows; i++) {

printf("%s\n", PQgetvalue(res, i, 0));

}

PQclear(res);

PQfinish(conn);

return 0;

}

using Npgsql;

string username = "admin";

string password = "quest";

string database = "qdb";

int port = 8812;

var connectionString = $@"host=localhost;port={port};username={username};password={password};

database={database};ServerCompatibilityMode=NoTypeLoading;";

await using NpgsqlConnection connection = new NpgsqlConnection(connectionString);

await connection.OpenAsync();

var sql = "SELECT x FROM long_sequence(5);";

await using NpgsqlCommand command = new NpgsqlCommand(sql, connection);

await using (var reader = await command.ExecuteReaderAsync()) {

while (await reader.ReadAsync())

{

var x = reader.GetInt64(0);

}

}

require 'pg'

begin

conn =PG.connect( host: "127.0.0.1", port: 8812, dbname: 'qdb',

user: 'admin', password: 'quest' )

rows = conn.exec 'SELECT x FROM long_sequence(5);'

rows.each do |row|

puts row

end

rescue PG::Error => e

puts e.message

ensure

conn.close if conn

end

<?php

function exceptions_error_handler($severity, $message, $filename, $lineno) {

throw new ErrorException($message, 0, $severity, $filename, $lineno);

}

set_error_handler('exceptions_error_handler');

$db_conn = null;

try {

$db_conn = pg_connect(" host = 'localhost' port=8812 dbname = 'qdb' user = 'admin' password = 'quest' ");

$result = pg_query($db_conn, 'SELECT x FROM long_sequence(5);' );

while ($row = pg_fetch_assoc($result) ){

print_r($row);

}

pg_free_result($result);

} catch (Exception $e) {

echo 'Caught exception: ', $e->getMessage(), "\n";

} finally {

if (!is_null($db_conn)) {

pg_close($db_conn);

}

}

?>

PostgreSQL compatibility

QuestDB offers broad compatibility with the PostgreSQL ecosystem.

However, please note some limitations.

Forward-only cursors

QuestDB diverges from PostgreSQL in its handling of cursor commands. While PostgreSQL supports scrollable cursors, enabling backward and forward navigation through the results of a SQL query, QuestDB applies a different approach.

QuestDB does not support scrollable cursors that require explicit creation and

management through DECLARE CURSOR and subsequent operations like FETCH.

Instead, QuestDB supports non-scrollable, or "forward-only", cursors. This

distinction means that while you can iterate over query results sequentially,

you cannot navigate backwards or access result positions as you might with

scrollable cursors in PostgreSQL.

As a result, some PostgreSQL drivers and libraries that rely on scrollable cursors may not be fully compatible with QuestDB. For instance, psycopg2 — a popular PostgreSQL driver for Python — utilizes scrollable cursors extensively. If possible, select drivers that support non-scrollable cursors for optimal compatibility. One such example is asyncpg, which is database driver for asyncio and PostgreSQL.

REST HTTP API

QuestDB exposes a REST API for compatibility with a wide range of libraries and tools.

The REST API is accessible on port 9000 and has the following query-capable

entrypoints:

For details such as content type, query parameters and more, refer to the REST HTTP API reference.

| Entrypoint | HTTP Method | Description | REST HTTP API Reference |

|---|---|---|---|

/exp?query=.. | GET | Export SQL Query as CSV | Reference |

/exec?query=.. | GET | Run SQL Query returning JSON result set | Reference |

/exp: SQL Query to CSV

The /exp entrypoint allows querying the database with a SQL select query and

obtaining the results as CSV.

For obtaining results in JSON, use /exec instead, documented next.

- cURL

- Python

curl -G --data-urlencode \

"query=SELECT * FROM example_table2 LIMIT 3" \

http://localhost:9000/exp

"col1","col2","col3"

"a",10.5,true

"b",100.0,false

"c",,true

import requests

resp = requests.get(

'http://localhost:9000/exp',

{

'query': 'SELECT * FROM example_table2',

'limit': '3,6' # Rows 3, 4, 5

})

print(resp.text)

"col1","col2","col3"

"d",20.5,true

"e",200.0,false

"f",,true

/exec: SQL Query to JSON

The /exec entrypoint takes a SQL query and returns results as JSON.

This is similar to the /exp entry point which returns results as CSV.

Querying Data

- cURL

- Python

- NodeJS

- Go

curl -G \

--data-urlencode "query=SELECT x FROM long_sequence(5);" \

http://localhost:9000/exec

The JSON response contains the original query, a "columns" key with the schema

of the results, a "count" number of rows and a "dataset" with the results.

{

"query": "SELECT x FROM long_sequence(5);",

"columns": [{ "name": "x", "type": "LONG" }],

"dataset": [[1], [2], [3], [4], [5]],

"count": 5

}

import sys

import requests

host = 'http://localhost:9000'

sql_query = "select * from long_sequence(10)"

try:

response = requests.get(

host + '/exec',

params={'query': sql_query}).json()

for row in response['dataset']:

print(row[0])

except requests.exceptions.RequestException as e:

print(f'Error: {e}', file=sys.stderr)

const fetch = require("node-fetch")

const HOST = "http://localhost:9000"

async function run() {

try {

const query = "SELECT x FROM long_sequence(5);"

const response = await fetch(

`${HOST}/exec?query=${encodeURIComponent(query)}`,

)

const json = await response.json()

console.log(json)

} catch (error) {

console.log(error)

}

}

run()

package main

import (

"fmt"

"io/ioutil"

"log"

"net/http"

"net/url"

)

func main() {

u, err := url.Parse("http://localhost:9000")

checkErr(err)

u.Path += "exec"

params := url.Values{}

params.Add("query", "SELECT x FROM long_sequence(5);")

u.RawQuery = params.Encode()

url := fmt.Sprintf("%v", u)

res, err := http.Get(url)

checkErr(err)

defer res.Body.Close()

body, err := ioutil.ReadAll(res.Body)

checkErr(err)

log.Println(string(body))

}

func checkErr(err error) {

if err != nil {

panic(err)

}

}

Alternatively, the /exec endpoint can be used to create a table and the

INSERT statement can be used to populate it with values:

- cURL

- NodeJS

- Python

# Create Table

curl -G \

--data-urlencode "query=CREATE TABLE IF NOT EXISTS trades(name VARCHAR, value INT)" \

http://localhost:9000/exec

# Insert a row

curl -G \

--data-urlencode "query=INSERT INTO trades VALUES('abc', 123456)" \

http://localhost:9000/exec

# Update a row

curl -G \

--data-urlencode "query=UPDATE trades SET value = 9876 WHERE name = 'abc'" \

http://localhost:9000/exec

The node-fetch package can be installed using npm i node-fetch.

const fetch = require("node-fetch");

const HOST = "http://localhost:9000";

async function createTable() {

try {

const query = "CREATE TABLE IF NOT EXISTS trades (name VARCHAR, value INT)";

const response = await fetch(

`${HOST}/exec?query=${encodeURIComponent(query)}`,

);

const json = await response.json();

console.log(json);

} catch (error) {

console.log(error);

}

}

async function insertData() {

try {

const query = "INSERT INTO trades VALUES('abc', 123456)";

const response = await fetch(

`${HOST}/exec?query=${encodeURIComponent(query)}`,

);

const json = await response.json();

console.log(json);

} catch (error) {

console.log(error);

}

}

async function updateData() {

try {

const query = "UPDATE trades SET value = 9876 WHERE name = 'abc'";

const response = await fetch(

`${HOST}/exec?query=${encodeURIComponent(query)}`,

);

const json = await response.json();

console.log(json);

} catch (error) {

console.log(error);

}

}

createTable().then(insertData).then(updateData);

import requests

import json

host = 'http://localhost:9000'

def run_query(sql_query):

query_params = {'query': sql_query, 'fmt' : 'json'}

try:

response = requests.get(host + '/exec', params=query_params)

json_response = json.loads(response.text)

print(json_response)

except requests.exceptions.RequestException as e:

print("Error: %s" % (e))

# create table

run_query("CREATE TABLE IF NOT EXISTS trades (name VARCHAR, value INT)")

# insert row

run_query("INSERT INTO trades VALUES('abc', 123456)")

# update row

run_query("UPDATE trades SET value = 9876 WHERE name = 'abc'")

Apache Parquet

Apache Parquet support is in beta.

It may not be fit for production use.

Please let us know if you run into issues.

Either:

- Email us at support@questdb.io

- Join our public Slack

- Post on our Discourse community

Parquet files can be read and thus queried by QuestDB.

QuestDB is shipped with a demo Parquet file, trades.parquet, which can be

queried using the read_parquet function.

Example:

SELECT

*

FROM

read_parquet('trades.parquet')

WHERE

side = 'buy';

The trades.parquet file is located in the import subdirectory inside the

QuestDB root directory. Drop your own Parquet files to the import directory and

query them using the read_parquet() function.

You can change the allowed directory by setting the cairo.sql.copy.root

configuration key.

For more information, see the Parquet documentation.

What's next?

Now... SQL! It's query time.

Whether you want to use the Web Console, PostgreSQL or REST HTTP (or both), query construction is rich.

To brush up and learn what's unique in QuestDB, consider the following:

And to learn about some of our favourite, most powerful syntax:

- Window functions are a powerful analysis tool

- Aggregate functions - aggregations are key!

- Date & time operators to learn about date and time

SAMPLE BYto summarize data into chunks based on a specified time interval, from a year to a microsecondWHERE INto compress time ranges into concise intervalsLATEST ONfor latest values within multiple series within a tableASOF JOINto associate timestamps between a series based on proximity; no extra indices required

Looking for visuals?

- Explore Grafana

- Jump quickly into the Web Console