High-performance time-series database

Superior developer experience with SQL and time-series extensions. Speed and reliability to solve ingestion bottlenecks.

Performance

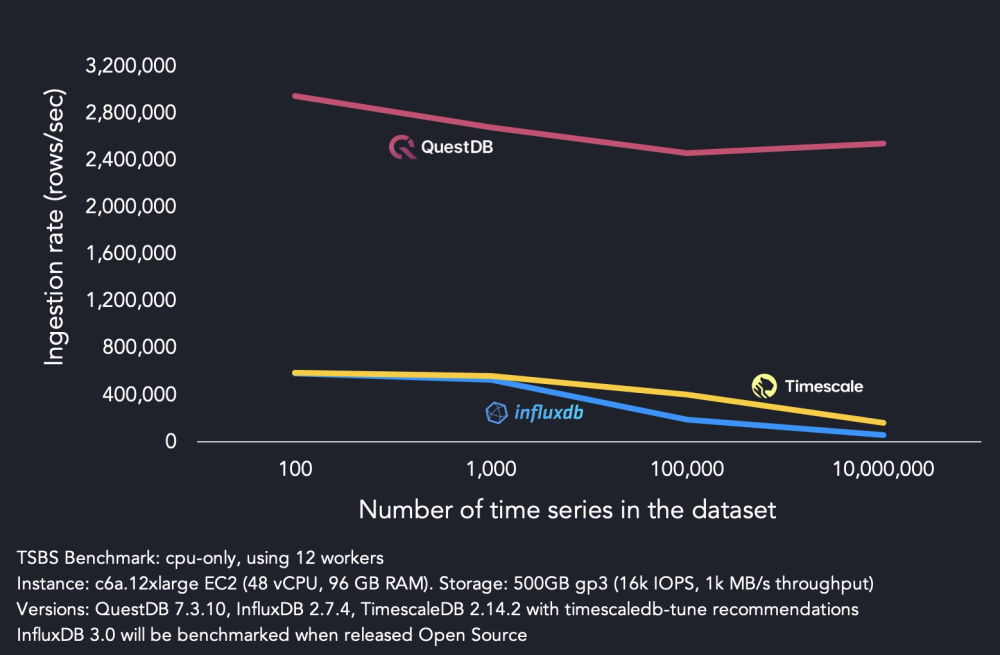

- Ingest 2M rows/s per node

- Up to 10x faster writes vs InfluxDB

- Don’t worry about cardinality

- Columnar storage

- Data partitioned by time

- SIMD-optimized queries
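The ideas behind "columnar storage" and "data partitioned by time" can be modelled in a few lines of plain Python. This is a minimal sketch, not QuestDB's on-disk format. The day-named partitions, the `to_columnar_partitions` helper and the sample `sensors` rows are hypothetical. Rows are grouped into one partition per day, and inside each partition every column is kept as its own list.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical sample rows: (timestamp, sensorName, tempC)
ROWS = [
    (datetime(2021, 5, 14, 23, 59), "s1", 21.5),
    (datetime(2021, 5, 15, 0, 1), "s2", 19.0),
    (datetime(2021, 5, 15, 8, 30), "s1", 22.25),
]


def partition_key(ts: datetime) -> str:
    """Daily partition name such as '2021-05-14' (naming assumed for illustration)."""
    return ts.strftime("%Y-%m-%d")


def to_columnar_partitions(rows):
    """Group rows by day, then store each partition column by column."""
    partitions = defaultdict(lambda: {"timestamp": [], "sensorName": [], "tempC": []})
    for ts, name, temp in rows:
        part = partitions[partition_key(ts)]
        part["timestamp"].append(ts)
        part["sensorName"].append(name)
        part["tempC"].append(temp)
    return dict(partitions)


parts = to_columnar_partitions(ROWS)
# A query limited to 2021-05-15 touches a single partition, and averaging
# tempC reads only the tempC column of that partition.
day = parts["2021-05-15"]["tempC"]
print(sum(day) / len(day))  # prints 20.625
```

The design point is that time-bounded queries can skip whole partitions, and aggregations read only the columns they name instead of entire rows.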

Developer experience

- Open source under Apache 2.0

- Built-in SQL optimizer & REST API

- PostgreSQL driver compatibility

- InfluxDB Line Protocol API

- SQL and time-series joins

- Grafana integration

Enterprise ready

- Cloud & on-prem

- Hot & cold read replicas

- Role-based access control

- Multiple availability zones

- Data compression

- Enterprise support SLA

Try the live demo

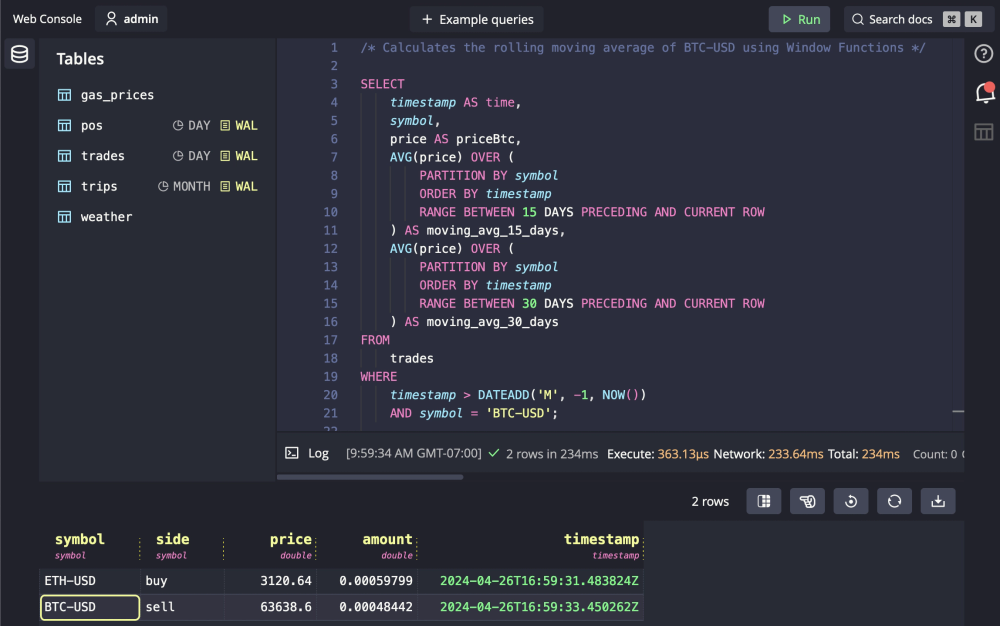

Query over 2 billion rows in milliseconds in the QuestDB Web Console using SQL

Try the QuestDB demo in your browser

Peak time-series performance

Hyper ingestion

For massive volumes of time-series data, trust a specialized time-series database. Timestamps are first-class, and blistering throughput is sustained even at the highest data cardinality. Deduplication and out-of-order ingestion also work out of the box.
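To show what "deduplication and out-of-order ingestion" mean for the stored result, here is a small model in plain Python. It reproduces only the outcome, not QuestDB's ingestion engine. It assumes rows are deduplicated on the key `(timestamp, sensorName)` with upsert semantics, so a later row replaces an earlier one with the same key. The `ingest` function and the sample rows are hypothetical.

```python
from datetime import datetime


def ingest(table, batch):
    """Merge `batch` into `table`, upserting on (timestamp, sensorName).

    Rows are (timestamp, sensorName, tempC) tuples. The result is kept in
    timestamp order regardless of the order in which the batch arrived.
    """
    by_key = {(ts, name): (ts, name, temp) for ts, name, temp in table}
    for ts, name, temp in batch:
        by_key[(ts, name)] = (ts, name, temp)  # a later row replaces an earlier one
    return sorted(by_key.values(), key=lambda row: (row[0], row[1]))


table = [(datetime(2021, 5, 14, 10, 5), "s1", 21.0)]
late_batch = [
    (datetime(2021, 5, 14, 10, 0), "s1", 20.5),  # out of order: older than the stored row
    (datetime(2021, 5, 14, 10, 5), "s1", 21.2),  # same key as a stored row: replaces it
    (datetime(2021, 5, 14, 10, 0), "s2", 18.0),
]
table = ingest(table, late_batch)
```

After the merge the table holds three rows in time order, and the duplicate 10:05 reading for `s1` carries the newer value 21.2.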

“QuestDB outperforms every database we have tested and delivered a 10x improvement in querying speed. It has become a critical piece of our infrastructure.”

“QuestDB is a time series database truly built by developers for developers. We found that QuestDB provides a unicorn solution to handle extreme TPS while also offering a simplified SQL programming interface.”

Augmented SQL for time-series data

ANSI SQL with time-series extensions made for timestamped data

```sql
-- Search time
SELECT timestamp, tempC
FROM sensors
WHERE timestamp IN '2021-05-14;1M';
```
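The interval string `'2021-05-14;1M'` in the "Search time" query is shorthand for a time range. The sketch below assumes the following semantics: the date stands for the whole day, and the `;1M` modifier pushes the end of that interval one calendar month later. That gives the inclusive range 2021-05-14T00:00:00 to 2021-06-14T23:59:59.999999. The helper names are hypothetical, and so is the day-clamping rule for shorter months.

```python
import calendar
from datetime import datetime, timedelta


def add_months(dt: datetime, months: int) -> datetime:
    """Calendar-month addition, clamping the day to the target month's length."""
    index = dt.month - 1 + months
    year, month = dt.year + index // 12, index % 12 + 1
    day = min(dt.day, calendar.monthrange(year, month)[1])  # assumed clamping rule
    return dt.replace(year=year, month=month, day=day)


def day_with_month_extension(day: str, months: int):
    """Inclusive (start, end) for '<day>;<months>M' under the assumed semantics."""
    start = datetime.strptime(day, "%Y-%m-%d")
    end_of_day = start + timedelta(days=1) - timedelta(microseconds=1)
    return start, add_months(end_of_day, months)


start, end = day_with_month_extension("2021-05-14", 1)
readings = [
    datetime(2021, 5, 13, 23, 0),
    datetime(2021, 6, 1),
    datetime(2021, 6, 14, 12),
    datetime(2021, 6, 15),
]
in_range = [ts for ts in readings if start <= ts <= end]
```

Because the table is stored in timestamp order, a database can find such a range by seeking to its two ends rather than scanning every row. The list comprehension here only models which rows qualify.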

```sql
-- Slice time
SELECT timestamp, avg(tempC)
FROM sensors
SAMPLE BY 5m;
```
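The "Slice time" query groups readings into fixed 5-minute buckets and averages `tempC` within each bucket. The plain-Python model below assumes buckets line up with the clock (10:00, 10:05, ...) counted from midnight, which works for any width that divides a day evenly. `sample_by` and the sample readings are hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def sample_by(rows, width: timedelta):
    """Average (timestamp, value) rows per fixed-width, clock-aligned bucket."""
    sums = defaultdict(lambda: [0.0, 0])  # bucket start -> [sum, count]
    for ts, value in rows:
        midnight = ts.replace(hour=0, minute=0, second=0, microsecond=0)
        bucket = midnight + ((ts - midnight) // width) * width
        acc = sums[bucket]
        acc[0] += value
        acc[1] += 1
    return [(bucket, total / count) for bucket, (total, count) in sorted(sums.items())]


readings = [
    (datetime(2021, 5, 14, 10, 1), 20.0),
    (datetime(2021, 5, 14, 10, 4), 22.0),
    (datetime(2021, 5, 14, 10, 6), 25.0),
]
result = sample_by(readings, timedelta(minutes=5))
```

The first two readings fall into the 10:00 bucket (average 21.0), and the third opens the 10:05 bucket.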

```sql
-- Navigate time
SELECT timestamp, sensorName, tempC
FROM sensors
LATEST ON timestamp PARTITION BY sensorName;
```
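The "Navigate time" query returns the most recent row for each `sensorName`. The sketch below shows the same result in plain Python. The `latest_on` helper and the sample rows are hypothetical. The output is sorted by timestamp only to keep the example deterministic, and no particular result order is implied for the SQL query.

```python
from datetime import datetime


def latest_on(rows, ts_index: int, key_index: int):
    """Keep the row with the greatest timestamp for each distinct key."""
    latest = {}
    for row in rows:
        key = row[key_index]
        if key not in latest or row[ts_index] > latest[key][ts_index]:
            latest[key] = row
    return sorted(latest.values(), key=lambda r: r[ts_index])


rows = [  # (timestamp, sensorName, tempC)
    (datetime(2021, 5, 14, 10, 0), "s1", 20.5),
    (datetime(2021, 5, 14, 10, 5), "s2", 18.0),
    (datetime(2021, 5, 14, 10, 10), "s1", 21.0),
    (datetime(2021, 5, 14, 10, 2), "s2", 17.5),
]
latest = latest_on(rows, ts_index=0, key_index=1)
```

For `s1` the 10:10 reading wins, and for `s2` the 10:05 reading wins, even though an older `s2` row appears later in the input.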

```sql
-- Merge time
SELECT sensors.timestamp ts, rain1H
FROM sensors
ASOF JOIN weather;
```
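The "Merge time" query pairs each `sensors` row with the `weather` row whose timestamp is the closest one at or before it. This is the standard ASOF join rule. The plain-Python sketch below implements that rule with a binary search over the right-hand timestamps. `asof_join` and the sample data are hypothetical, and both inputs are assumed to be sorted by timestamp already.

```python
from bisect import bisect_right
from datetime import datetime


def asof_join(left, right):
    """Attach to each left row the latest right row with timestamp <= its own.

    Rows are tuples whose element 0 is the timestamp, and both lists must be
    sorted by it. Left rows with no earlier right-hand match get None.
    """
    right_ts = [r[0] for r in right]
    joined = []
    for row in left:
        i = bisect_right(right_ts, row[0])  # count of right rows at or before row[0]
        joined.append((row, right[i - 1] if i else None))
    return joined


sensors = [(datetime(2021, 5, 14, 9, 55), 20.0),
           (datetime(2021, 5, 14, 10, 0), 21.0),
           (datetime(2021, 5, 14, 10, 7), 22.0)]
weather = [(datetime(2021, 5, 14, 10, 0), 0.0),   # (timestamp, rain1H)
           (datetime(2021, 5, 14, 10, 5), 1.2)]
result = [(s[0], w[1] if w else None) for s, w in asof_join(sensors, weather)]
```

The 9:55 reading has no earlier weather row and gets `None`. The 10:00 reading matches the weather row stamped exactly 10:00, and the 10:07 reading picks up the 10:05 value.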

“QuestDB is used at Airbus for real-time applications involving hundreds of millions of data points per day. For us, QuestDB is an outstanding solution that meets (and exceeds) our performance requirements.”

Available in three flavours

Open Source, Enterprise, Cloud

Deploy QuestDB in the cloud, on-premises or on your laptop.

- Open source: Always open source. QuestDB is licensed under Apache 2.0 for worry-free, developer-friendly usage.

- QuestDB Cloud: Affordable managed clusters with replication, backups and monitoring. Trust the expert QuestDB team.

- Enterprise: Premium features such as role-based access control and database replication let you scale on your own terms.

“Switching to QuestDB was a game-changer for our analytics. With Elasticsearch, we faced slow ingestion and latency issues for queries. With QuestDB, we now process 500M records per day smoothly.”